Convex Optimization Techniques

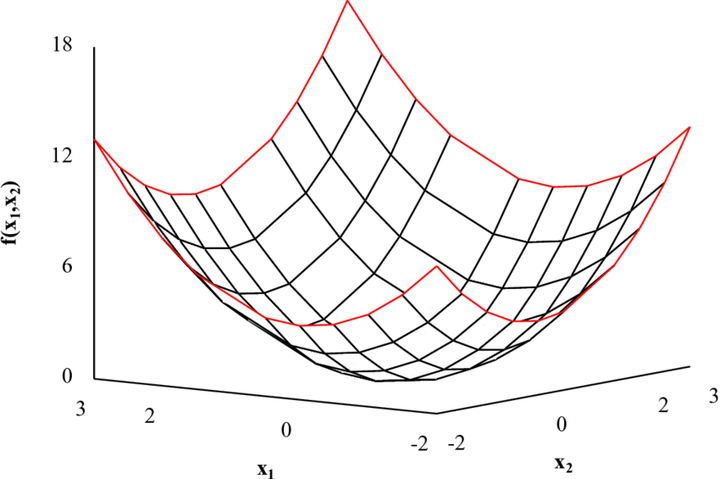

Convex optimization is a subfield of mathematical optimization that studies the problem of minimizing convex functions over convex sets. We started by studying the three-parameter Weibull distribution and wrote a Python script to maximize its likelihood function. We then worked through batch gradient descent, stochastic gradient descent, and mini-batch gradient descent. We also explored several matrix factorization methods, including LU and PLU factorization of the basis matrix B and, for symmetric positive definite matrices B, the Cholesky factorizations LLᵀ and LDLᵀ. Finally, we explored adaptive optimization methods such as Adagrad, RMSProp, AdaMax, and Adam, and implemented each of these techniques in Python to visualize how they differ.
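The Weibull likelihood maximization mentioned above can be sketched as follows. This is not the original script. It is a minimal, standard-library-only sketch that assumes the density f(x) = (k/λ)((x - γ)/λ)^(k-1) exp(-((x - γ)/λ)^k) for x > γ, with shape k, scale λ, and location γ. For fixed k and γ the scale has the closed form λ^k = mean((x_i - γ)^k), so the sketch profiles λ out and runs a crude grid search over (k, γ). The grid is deliberately bounded, because for k < 1 the three-parameter likelihood grows without bound as γ approaches the smallest observation. The helper names (`weibull3_loglik`, `profiled_scale`, `fit_weibull3_grid`), the synthetic data, and the grid ranges are illustrative assumptions.

```python
import math
import random


def weibull3_loglik(data, shape, scale, loc):
    """Log-likelihood of a three-parameter Weibull (shape k, scale lam, location gamma)."""
    if shape <= 0 or scale <= 0 or any(x <= loc for x in data):
        return -math.inf  # outside the parameter space or the support x > gamma
    n = len(data)
    total = n * math.log(shape) - n * shape * math.log(scale)
    for x in data:
        z = x - loc
        total += (shape - 1) * math.log(z) - (z / scale) ** shape
    return total


def profiled_scale(data, shape, loc):
    """Closed-form scale MLE for fixed shape and location: lam^k = mean((x - gamma)^k)."""
    return (sum((x - loc) ** shape for x in data) / len(data)) ** (1.0 / shape)


def fit_weibull3_grid(data, shapes, locs):
    """Crude MLE (hypothetical helper): grid search over (shape, loc), scale profiled out."""
    x_min = min(data)
    best_ll, best_params = -math.inf, None
    for k in shapes:
        for g in locs:
            if g >= x_min:
                continue  # the location must lie strictly below every observation
            lam = profiled_scale(data, k, g)
            ll = weibull3_loglik(data, k, lam, g)
            if ll > best_ll:
                best_ll, best_params = ll, (k, lam, g)
    return best_ll, best_params


# Hypothetical example: synthetic data with shape 3, scale 2, location 1.
# random.weibullvariate(alpha, beta) takes alpha = scale, beta = shape.
random.seed(0)
sample = [1.0 + random.weibullvariate(2.0, 3.0) for _ in range(300)]
shapes = [0.5 + 0.1 * i for i in range(40)]   # shape grid 0.5 ... 4.4
locs = [0.02 * i for i in range(60)]          # location grid 0.00 ... 1.18
ll, (k_hat, lam_hat, g_hat) = fit_weibull3_grid(sample, shapes, locs)
print(f"k={k_hat:.2f}  lambda={lam_hat:.3f}  gamma={g_hat:.2f}  loglik={ll:.2f}")
```

The grid keeps the sketch dependency-free; in practice a local optimizer started from the best grid point would refine the estimate.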
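The three gradient descent variants described above differ only in how many samples contribute to each gradient estimate. A minimal sketch, assuming a least-squares line fit y ≈ w·x + b as the convex objective (the function name, learning rate, epoch count, and data are illustrative choices): `batch_size=None` uses every sample in every step (batch GD), `batch_size=1` gives stochastic GD, and anything in between gives mini-batch GD.

```python
import random


def gradient_descent(xs, ys, lr=0.05, epochs=1000, batch_size=None, seed=0):
    """Fit y ~ w*x + b by minimizing the mean squared error with gradient descent.

    batch_size=None      -> batch GD (every sample in every step)
    batch_size=1         -> stochastic GD (one sample per step)
    1 < batch_size < n   -> mini-batch GD
    """
    rng = random.Random(seed)
    n = len(xs)
    bs = n if batch_size is None else batch_size
    w, b = 0.0, 0.0
    order = list(range(n))
    for _ in range(epochs):
        rng.shuffle(order)  # new sample order each epoch (no effect on full-batch GD)
        for start in range(0, n, bs):
            batch = order[start:start + bs]
            # Gradient of (1/m) * sum((w*x + b - y)^2) over the current batch
            gw = gb = 0.0
            for i in batch:
                err = w * xs[i] + b - ys[i]
                gw += 2.0 * err * xs[i]
                gb += 2.0 * err
            m = len(batch)
            w -= lr * gw / m
            b -= lr * gb / m
    return w, b


xs = [i / 10 for i in range(20)]
ys = [3.0 * x + 1.0 for x in xs]  # noise-free line y = 3x + 1
for label, bs in (("batch", None), ("stochastic", 1), ("mini-batch", 4)):
    w, b = gradient_descent(xs, ys, batch_size=bs)
    print(f"{label:10s} w={w:.4f} b={b:.4f}")
```

With noise-free data all three variants reach the same line; on noisy data the stochastic and mini-batch versions would keep fluctuating around the minimizer unless the learning rate is decayed.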
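For the LU and PLU factorizations mentioned above, a minimal sketch of Gaussian elimination with partial pivoting follows. It returns P, L, U with PA = LU (equivalently A = PᵀLU), where L is unit lower triangular and U is upper triangular. Plain LU is the same loop without the row swaps, and it breaks down as soon as a zero pivot appears, which is why the example matrix has a zero in its top-left corner. `plu` and `matmul` are hypothetical helper names, and matrices are plain lists of rows rather than NumPy arrays.

```python
def matmul(x, y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(x[i][t] * y[t][j] for t in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def plu(a):
    """Gaussian elimination with partial pivoting: return (P, L, U) with P A = L U."""
    n = len(a)
    u = [list(map(float, row)) for row in a]   # working copy that becomes U
    l = [[0.0] * n for _ in range(n)]          # multipliers; unit diagonal added at the end
    perm = list(range(n))                      # perm[i] = original row now in position i
    for k in range(n):
        # Partial pivoting: bring the largest |entry| of column k (rows k..n-1) to row k
        p = max(range(k, n), key=lambda i: abs(u[i][k]))
        if u[p][k] == 0.0:
            raise ValueError("matrix is singular")
        if p != k:
            u[k], u[p] = u[p], u[k]
            l[k], l[p] = l[p], l[k]            # keep earlier multipliers with their rows
            perm[k], perm[p] = perm[p], perm[k]
        for i in range(k + 1, n):
            factor = u[i][k] / u[k][k]
            l[i][k] = factor
            u[i][k] = 0.0                      # entry eliminated by construction
            for j in range(k + 1, n):
                u[i][j] -= factor * u[k][j]
    for i in range(n):
        l[i][i] = 1.0
    p_mat = [[1.0 if j == perm[i] else 0.0 for j in range(n)] for i in range(n)]
    return p_mat, l, u


A = [[0.0, 2.0, 1.0], [1.0, 1.0, 1.0], [2.0, 1.0, 3.0]]  # A[0][0] = 0: LU without pivoting fails
P, L, U = plu(A)
print("L =", L)
print("U =", U)
print("PA == LU:", matmul(P, A) == matmul(L, U))
```

Once B is factored, solving Bx = r reduces to a permutation followed by one forward and one back substitution, which is what makes the factorization worth reusing across many right-hand sides.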
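For symmetric positive definite matrices, the two Cholesky variants mentioned above can be sketched in the same list-of-rows style (the function names and the example matrix are illustrative). `cholesky` computes a lower-triangular L with A = LLᵀ; `ldlt` computes a unit lower-triangular L and a diagonal d with A = L·diag(d)·Lᵀ, which avoids square roots. The two results are related by L_chol = L_ldl·diag(√d).

```python
import math


def cholesky(a):
    """Return lower-triangular L with A = L L^T (A symmetric positive definite)."""
    n = len(a)
    l = [[0.0] * n for _ in range(n)]
    for j in range(n):
        s = a[j][j] - sum(l[j][k] ** 2 for k in range(j))
        if s <= 0.0:
            raise ValueError("matrix is not positive definite")
        l[j][j] = math.sqrt(s)
        for i in range(j + 1, n):
            l[i][j] = (a[i][j] - sum(l[i][k] * l[j][k] for k in range(j))) / l[j][j]
    return l


def ldlt(a):
    """Return (L, d): unit lower-triangular L and diagonal d with A = L diag(d) L^T."""
    n = len(a)
    l = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    d = [0.0] * n
    for j in range(n):
        d[j] = a[j][j] - sum(l[j][k] ** 2 * d[k] for k in range(j))
        if d[j] <= 0.0:
            raise ValueError("matrix is not positive definite")
        for i in range(j + 1, n):
            l[i][j] = (a[i][j] - sum(l[i][k] * l[j][k] * d[k] for k in range(j))) / d[j]
    return l, d


A = [[4.0, 12.0, -16.0], [12.0, 37.0, -43.0], [-16.0, -43.0, 98.0]]
L = cholesky(A)    # [[2.0, 0.0, 0.0], [6.0, 1.0, 0.0], [-8.0, 5.0, 3.0]]
L1, d = ldlt(A)    # L1 = [[1.0, 0.0, 0.0], [3.0, 1.0, 0.0], [-4.0, 5.0, 1.0]], d = [4.0, 1.0, 9.0]
print(L)
print(L1, d)
```

A failed pivot check (`s <= 0` or `d[j] <= 0`) is also a cheap numerical test of whether a symmetric matrix is positive definite.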
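The adaptive methods listed above differ mainly in how they rescale each coordinate of the gradient. The sketch below implements the commonly published update rules. It assumes a hypothetical ill-conditioned convex test function f(x, y) = x² + 10y² and illustrative hyperparameters (lr = 0.1, β1 = 0.9, β2 = 0.999, ρ = 0.9). Adagrad divides by the root of the accumulated squared gradients, and RMSProp divides by the root of an exponential moving average of them. Adam adds a bias-corrected momentum term, and AdaMax replaces Adam's second moment with an exponentially weighted infinity norm. The small `eps` in AdaMax's denominator is an added safeguard against division by zero.

```python
import math


def grad(p):
    """Gradient of the hypothetical convex test function f(x, y) = x^2 + 10*y^2."""
    x, y = p
    return [2.0 * x, 20.0 * y]


def objective(p):
    x, y = p
    return x * x + 10.0 * y * y


def run(method, start=(3.0, 1.0), lr=0.1, steps=1000,
        beta1=0.9, beta2=0.999, rho=0.9, eps=1e-8):
    """Minimize the test function with one of the adaptive update rules."""
    p = list(start)
    s = [0.0, 0.0]   # per-coordinate scaling state (sum, EMA, or infinity norm)
    m = [0.0, 0.0]   # first-moment state (Adam, AdaMax)
    for t in range(1, steps + 1):
        g = grad(p)
        for i in range(2):
            if method == "adagrad":
                s[i] += g[i] ** 2                          # accumulate all squared gradients
                p[i] -= lr * g[i] / (math.sqrt(s[i]) + eps)
            elif method == "rmsprop":
                s[i] = rho * s[i] + (1 - rho) * g[i] ** 2  # moving average of squared gradients
                p[i] -= lr * g[i] / (math.sqrt(s[i]) + eps)
            elif method == "adam":
                m[i] = beta1 * m[i] + (1 - beta1) * g[i]
                s[i] = beta2 * s[i] + (1 - beta2) * g[i] ** 2
                m_hat = m[i] / (1 - beta1 ** t)            # bias corrections
                v_hat = s[i] / (1 - beta2 ** t)
                p[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
            elif method == "adamax":
                m[i] = beta1 * m[i] + (1 - beta1) * g[i]
                s[i] = max(beta2 * s[i], abs(g[i]))        # exponentially weighted infinity norm
                p[i] -= (lr / (1 - beta1 ** t)) * m[i] / (s[i] + eps)
            else:
                raise ValueError(f"unknown method: {method}")
    return p


for name in ("adagrad", "rmsprop", "adam", "adamax"):
    p = run(name)
    print(f"{name:8s} -> x={p[0]:+.4f}, y={p[1]:+.4f}, f={objective(p):.2e}")
```

Recording `p` at every step instead of only at the end yields the trajectories needed to plot the methods side by side on the contours of f.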