Language Models

I took up this project under the Programming Club, IIT Kanpur. It was my first introduction to language models, and a summary follows:

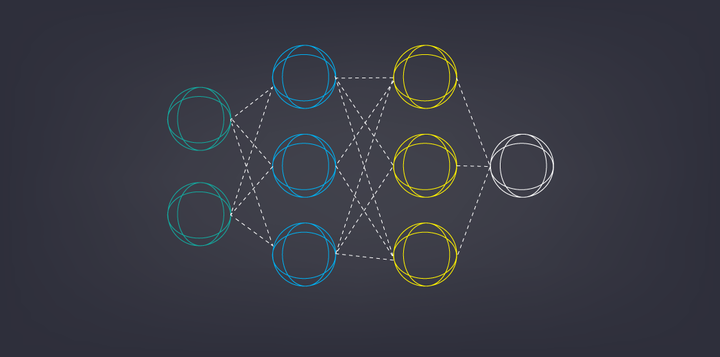

- Became familiar with deep neural networks by implementing the basic types of neural networks

- Learnt the basics of Natural Language Processing and some common applications of sequence models, with a focus on word embeddings such as GloVe, Word2Vec, and BERT

- Implemented the SOTA paper on ELMo (Embeddings from Language Models) in Python using PyTorch, reaching a score of 83%, which is close to the 85.8% SOTA value
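
As a rough illustration of the idea behind ELMo, the sketch below shows how the per-layer representations of a single token can be collapsed into one embedding: the layer scores are softmax-normalized, used to weight the layer vectors, and the sum is scaled by a factor `gamma`. This is not the project's PyTorch code. It uses plain Python lists, and the names `softmax`, `scalar_mix`, `layer_vectors`, and `layer_scores` as well as the toy numbers are assumptions made for illustration only.

```python
import math


def softmax(scores):
    """Turn raw layer scores into weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scalar_mix(layer_vectors, layer_scores, gamma=1.0):
    """Combine the per-layer vectors of one token into a single embedding.

    layer_vectors: one vector per layer, all of the same length
    layer_scores:  one raw (unnormalized) score per layer
    gamma:         overall scaling factor applied to the weighted sum
    """
    if len(layer_vectors) != len(layer_scores):
        raise ValueError("need exactly one score per layer")
    weights = softmax(layer_scores)
    mixed = [0.0] * len(layer_vectors[0])
    for w, vec in zip(weights, layer_vectors):
        for i, x in enumerate(vec):
            mixed[i] += w * x
    return [gamma * x for x in mixed]


# Toy example (hypothetical values): three layers, two dimensions each.
layers = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
mixed = scalar_mix(layers, layer_scores=[0.0, 0.0, 0.0])
print(mixed)
```

With equal scores every layer receives the same weight, so the result is the plain average of the layers. In a trained model the scores and `gamma` would be learned together with the downstream task.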